In previous Hive tutorials we have looked at installing and configuring Hive, data modeling and use of partitions to improve query response time. For a review of these concepts please refer to learn how to set up Hive, creating effective data models in Hive and use of partitioning tutorials. A good level of understanding of those concepts is assumed in this tutorial. In this tutorial we will focus on writing queries that get answers from your data.

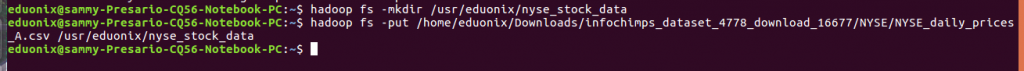

Data on New York Stock Exchange for the years 2000 and 2001 will be used to demonstrate concepts in this tutorial. It is available for download from https://s3.amazonaws.com/hw-sandbox/tutorial1/infochimps_dataset_4778_download_16677-csv.zip. Unzip the data, create a directory in HDFS and move it there.

hadoop fs -mkdir /usr/eduonix/nyse_stock_data hadoop fs -put /home/eduonix/Downloads/infochimps_dataset_4778_download_16677/NYSE/NYSE_daily_prices_A.csv /usr/eduonix/nyse_stock_data

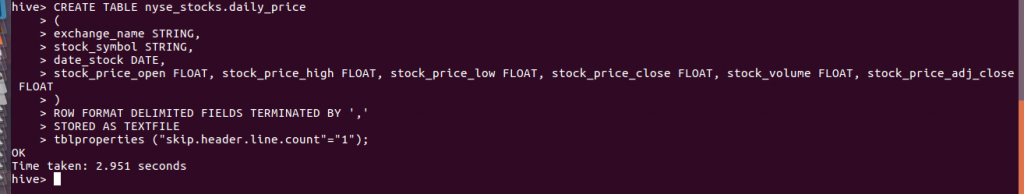

We need to create a table in Hive to store our stock data. The command below does that.

CREATE DATABASE nyse_stocks;

CREATE TABLE nyse_stocks.daily_price

(

exchange_name STRING,

stock_symbol STRING,

date_stock DATE,

stock_price_open FLOAT, stock_price_high FLOAT, stock_price_low FLOAT, stock_price_close FLOAT, stock_volume FLOAT, stock_price_adj_close FLOAT

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

STORED AS TEXTFILE

tblproperties ("skip.header.line.count"="1");

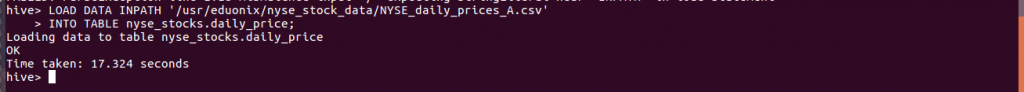

Once our table has been created we load data into it using the command below.

LOAD DATA INPATH '/usr/eduonix/nyse_stock_data/NYSE_daily_prices_A.csv INTO TABLE nyse_stocks.daily_price;

Load dividends data into HDFS using the command below.

hadoop fs -put /home/eduonix/Downloads/infochimps_dataset_4778_download_16677/NYSE/NYSE_dividends_A.csv /usr/eduonix/nyse_stock_data

![]()

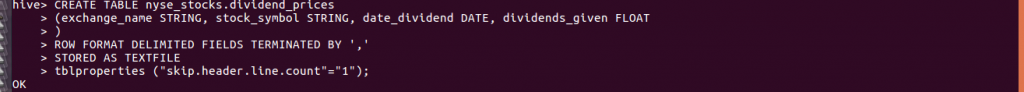

We also create a table for dividends data and load it. The commands below do that.

CREATE TABLE nyse_stocks.dividend_prices

(exchange_name STRING, stock_symbol STRING, date_dividend DATE, dividends_given FLOAT

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

STORED AS TEXTFILE

tblproperties ("skip.header.line.count"="1");

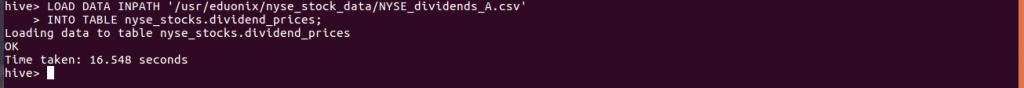

To load the data the command below is used.

LOAD DATA INPATH '/usr/eduonix/nyse_stock_data/NYSE_dividends_A.csv' INTO TABLE nyse_stocks.dividend_prices;

Hive provides a syntax that is very similar to SQL for data querying. The SELECT statement is the main query that is used to interact with stored data. At its very basic level to use select statement you specify the columns and the table from which you would like to select data. You can use a wild card or specify the columns to return although the former technique is not advisable. Query examples are shown below.

SELECT * FROM nyse_stocks.daily_price; SELECT stock_symbol, stock_price_open, nyse_stocks.daily_price;

The queries shown above are not very meaningful because they return all the data without any way of limiting the data to be returned. The WHERE clause offers a way of limiting data returned by specifying conditions that need to met. Conditions can be specified using predicate operators, logical operators and functions. These are the standard operators available in programming languages and SQL. Functions for working with dates, strings, mathematical operations, aggregations and text analytics are available. These functions and operators are very extensive so the reader is referred to HQL documentation for an exhaustive discussion. These operators and functions can be combined to create multiple criteria as desired. For example the AND operator can be used to specify two conditions that need to be true so that records can be returned. The OR operator can be used specify two conditions where either needs to be true for records to be returned.To demonstrate how these are used examples are given below. For example to return records where the closing price of a stock is greater than the opening price we would use the query shown below.

SELECT stock_symbol, stock_price_open FROM nyse_stocks.daily_price WHERE stock_price_close > stock_price_open;

To group results using a specific column and apply an aggregate function on each group you use the GROUP BY clause. You can specify a column that already exists in data schema or use a function to compute a key that will be used to group data. Aggregate functions that can be used include AVG, COUNT, GROUP_CONCAT, MAX, MIN, NDV, STDDEV, SUM, VARIANCE and user defined functions. When there are null values present in the data they are excluded when aggregate functions are applied. For example when using an AVG aggregate function null values will not be considered in calculation of the average. Examples of how aggregate functions are used are illustrated in the section below.

To get the average of the opening price of stocks you use the query below

SELECT AVG( stock_price_open) FROM nyse_stocks.daily_price;

To get the maximum price of the closing price stock where the closing price of stock was less than opening price of stock the query below would be used.

SELECT MAX(stock_price_open) FROM nyse_stocks.daily_price WHERE stock_price_close < stock_price_open;

To count the number of records in each group of stock symbol we use an aggregate function COUNT and a GROUP BY clause as shown in the query below.

SELECT stock_symbol, COUNT (1) FROM nyse_stocks.daily_price GROUP BY stock_symbol;

To operate further on groups created by the GROUP BY clause by applying operators you use the HAVING clause.

To sort records using a specific column in ascending or descending order you use the ORDER BY clause. By default records are ordered in ascending order so when you intend to sort in ascending you don’t need to specify any option. You can specify one or multiple columns that will be used to sort the data.

An example of use of order by where records are sorted in descending order is shown below

SELECT stock_symbol, stock_price_open FROM nyse_stocks.daily_price WHERE stock_price_close > stock_price_open ORDER BY stock_price_open DESC;

Querying data from two tables is supported in Hive by use of a join similary to how it is done in relational databases. However there are differences in the way they are supported that you need to be aware of. In Hive the only types of joins supported are equality joins, outer joins and left semi joins. The restriction to use only equality joins arises due to complexity of defining a non equality join as a mapreduce job. In this tutorial we loaded two data sets on stocks and dividends. We would now be interested in querying the two tables. As an example we select the opening stock price and dividends as shown below

SELECT nyse_stocks.daily_price.stock_price_open, nyse_stocks.dividend_prices.dividends_given FROM nyse_stocks.daily_price JOIN nyse_stocks.dividend_prices ON ( nyse_stocks.daily_price.stock_symbol = nyse_stocks.dividend_prices.stock_symbol);

when doing table joins and you realize one of the tables is small enough to fit in memory a map join will give better performance as compared to a regular join. Hive automatically decides if to use a map join when hive.auto.convert.join is set to true via hive-site.xml configuration file or from the Hive shell.

This tutorial explored the most useful and commonly used Hive queries. Creating tables and loading data was discussed. Querying all or specific columns was discussed. The use of a where clause to filter results that are returned was discussed. Grouping and sorting results was discussed. Finally using joins to query two tables was discussed.