Learn How to Process Stream Data in Real Time Using Apache Storm, Part 1

Apache Storm is a top-level Apache project that was developed to enable processing of very large volumes of stream data that arrive very fast...

Learn How to Query, Summarize and Analyze Data using Apache Hive

Apache Hive is a project within the Hadoop ecosystem that provides data warehouse capabilities. It was not designed for processing OLTP workloads. It has features...

Improving business performance using Hadoop for unstructured data

Hadoop, Hadoop everywhere!

Companies like Microsoft, IBM, and Oracle are building business solutions for unstructured data analysis, similar to Apache Hadoop (used for complex data sets)...

How Can Data Science Evolve Over the Next Decade?

Today, we live in a world where gadgets have gained popularity and, with the passage of time, are becoming capable of transmitting...

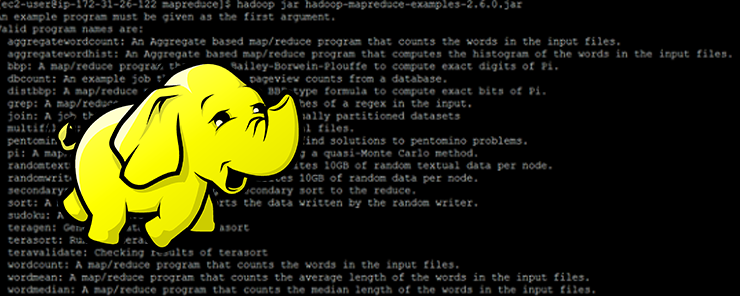

Running a MapReduce Program on an Amazon EC2 Hadoop Cluster with YARN

In the previous guide, we configured a Hadoop cluster with YARN on Amazon EC2. Now we will run a simple MapReduce program on...

What Makes Data Cleaning so Essential?

Data science is a field that every geek, businessman, entrepreneur, programmer, and visionary is talking about. When you go to Google and search...

R Programming Series: Clustering Using the factoextra Package

In this series, we have learned about dynamic map creation using ggmap and R, creating dynamic maps using ggplot2, 3D visualization in R, data...

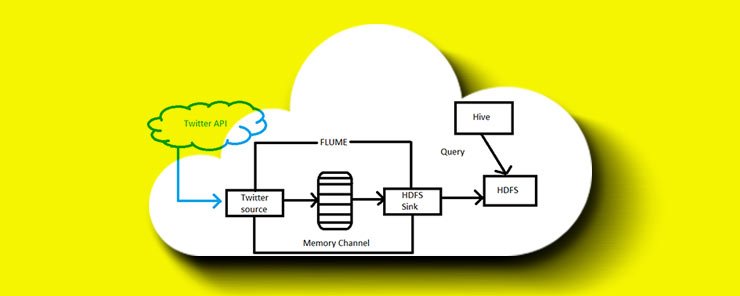

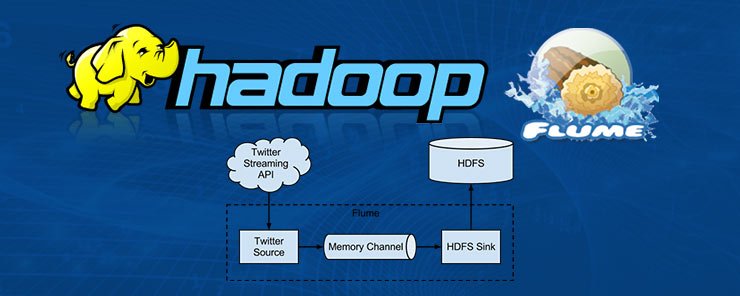

Learn how to stream data into Hadoop using Apache Flume

Apache Flume is a tool in the Hadoop ecosystem that provides capabilities for efficiently collecting, aggregating, and bringing large amounts of data into...

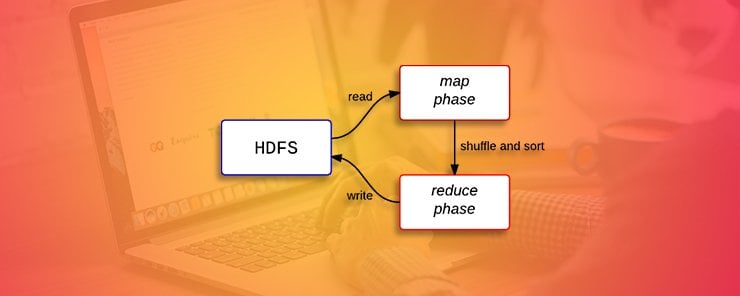

Learn about the MapReduce framework for data processing

The philosophy behind the MapReduce framework is to break processing into a map phase and a reduce phase. For each phase, the programmer chooses a...
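The map/reduce split described above can be sketched in plain Python. This is a minimal, single-process illustration that assumes a word-count job; the function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) are hypothetical and are not part of Hadoop's Java MapReduce API, which runs these phases distributed across a cluster.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    # Map: emit a (key, value) pair of (word, 1) for every word in the input line.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Shuffle: group all intermediate values by key, as the framework does
    # between the map and reduce phases.
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Reduce: combine all values for one key into a single result.
    return key, sum(values)


def run_job(lines: Iterable[str]) -> dict[str, int]:
    # Hypothetical driver: map every line, shuffle, then reduce each key in sorted order.
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    docs = ["the quick brown fox", "the lazy dog", "The fox"]
    print(run_job(docs))
    # prints {'brown': 1, 'dog': 1, 'fox': 2, 'lazy': 1, 'quick': 1, 'the': 3}
```

In a real Hadoop job, the map and reduce functions run in parallel on many nodes and the shuffle moves data over the network; the sketch keeps only the data flow between the two phases.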

Hadoop Project on the NCDC (National Climatic Data Center, NOAA) Dataset

NOAA's National Climatic Data Center (NCDC) is responsible for preserving, monitoring, assessing, and providing public access to weather data.

NCDC provides access to daily data from...