Ensemble learning is a machine learning approach that has become widely popular in recent times. Its popularity stems from its widespread use in machine learning competitions (such as the ones on Kaggle) and in many practical machine learning applications. It is an approach you should know if you plan on using machine learning in any form. Although ensemble learning is limited in practice only by your imagination, we’ll take a look at some popular ensemble methods in this article.

What Are Ensemble Methods?

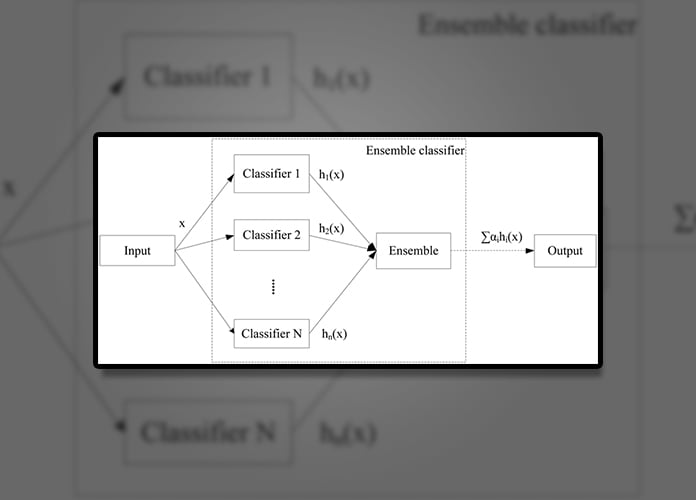

The idea behind ensemble learning is pretty intuitive. A good machine learning model should be able to perform well on unseen data. However, the ordinary machine learning methods learn just one hypothesis from the training data. Different methods produce different hypotheses, but none of them may be good enough. Hence, it makes more sense to learn many hypotheses and combine them to form one good hypothesis to achieve better results on unseen data. That’s exactly what ensemble learning aims to do. In ensemble learning, instead of training just one model, you train many models on the data and use the combined hypothesis of all the individual hypotheses as your final hypothesis. The models which are combined are called base learners.

Any machine learning model has two main sources of error that we’re interested in: the bias error and the variance error. The bias error of a model quantifies how far its predictions are, on average, from the correct values. A model with a high bias error is likely to underperform and have lower accuracy, even on the training data. Such models are said to underfit the data. On the other hand, the variance quantifies how much the model’s predictions change when it is trained on different samples of similar data. A model with a high variance is likely to perform extremely well on the training data but poorly on unseen data. It is said to overfit the data. Bias and variance usually trade off against each other: for a single model, decreasing one of them tends to increase the other.
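For squared-error regression, the two quantities above can be written down precisely. The decomposition below is a standard result, stated here under assumptions that are not in the text above: the data follow y = f(x) + ε with zero-mean noise of variance σ², and the symbol \hat{f}_D denotes the model obtained by training on a random training set D.

```latex
\mathbb{E}_{D,\varepsilon}\!\left[\big(y - \hat{f}_D(x)\big)^2\right]
= \underbrace{\big(\mathbb{E}_D[\hat{f}_D(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_D\!\left[\big(\hat{f}_D(x) - \mathbb{E}_D[\hat{f}_D(x)]\big)^2\right]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
```

The first term is large for models that underfit, the second for models that overfit, and the third cannot be reduced by any model.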

Any good machine learning model must maintain a good balance between bias and variance. A model with a single hypothesis is more prone to having either a high bias or a high variance, and it’s usually not possible to minimize both at the same time. Hence, you can try to achieve better performance by using ensemble learners, which can strike a better balance between bias and variance.

How To Construct An Ensemble Learner?

To construct an ensemble learner, you have to first create the base learners to be combined. This can be done in a sequential or parallel way. In a sequential construction, the generation of one learner affects the generation of a subsequent learner. In a parallel construction, all the base learners are independently generated. The base learners can be any of the ordinary supervised machine learning models such as neural networks, logistic regression, decision trees, etc.

After creating the base learners, you have to decide on a way to combine them. There are many combination methods, and each one leads to a different learner. The general goal when creating the base learners is good accuracy in the individual learners and as much diversity as possible across them: an ensemble gains the most when its members are individually accurate but make different errors, so that the errors tend to cancel out when the outputs are combined. Deciding how many base learners to generate is tricky, and the best number usually varies with the task at hand and the type of data available. With practice, you develop a feel for which ensemble architectures are worth trying on a given problem.
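To see why accuracy and diversity both matter, here is a small sketch of an idealized case. It assumes a binary classification task, a majority-vote combiner, and base learners whose errors are fully independent, which real learners never quite are. The function name `majority_vote_accuracy` is made up for this illustration.

```python
from math import comb


def majority_vote_accuracy(n_learners: int, p: float) -> float:
    """Probability that a strict majority of n independent learners is correct,
    when each learner is correct with probability p (binary classification)."""
    k_min = n_learners // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n_learners, k) * p**k * (1 - p) ** (n_learners - k)
        for k in range(k_min, n_learners + 1)
    )


# Each learner is only slightly better than chance (p = 0.6).
for n in (1, 5, 25, 101):
    print(n, round(majority_vote_accuracy(n, 0.6), 3))
```

Under these assumptions the ensemble accuracy grows with the number of learners as long as each one is better than chance. If the learners are worse than chance (say p = 0.4), adding more of them makes the vote worse, and if their errors are strongly correlated the gain shrinks.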

Popular Ensemble Learning Methods

As has been mentioned before, there is no one particular way to construct ensemble learners. However, there are many popular tried and tested methods that work particularly well. We’ll discuss some of them here.

Bagging

Bagging is short for bootstrap aggregating. In this approach, given the dataset, you first create a number of samples from it using random sampling with replacement. These sample datasets are called bootstrap samples. As you can guess from the name, the goal here is to aggregate the learners trained on these samples. We create one base learner per bootstrap sample and train each on its own sample, using a common learning algorithm for all of them. If it’s a regression task, we average the outputs from all the base learners and use that as our final prediction. On the other hand, if it’s a classification problem, we use voting to determine the predicted class, that is, we predict the class that the majority of the base learners predicted. Bagging reduces the variance of the learners and hence is most effective when the base learners have high variance.
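The procedure above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the base learner (a 1-nearest-neighbour regressor on one-dimensional inputs), the toy data, and all function names are assumptions chosen to keep the example short.

```python
import random
from collections import Counter
from statistics import mean


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]


def fit_1nn(sample):
    """Hypothetical high-variance base learner: 1-nearest-neighbour regression."""
    def predict(x):
        # Return the target of the training point closest to x.
        return min(sample, key=lambda pair: abs(pair[0] - x))[1]
    return predict


def bagging_fit(data, n_learners, rng):
    """Train one base learner per bootstrap sample, all with the same algorithm."""
    return [fit_1nn(bootstrap_sample(data, rng)) for _ in range(n_learners)]


def bagging_predict(learners, x):
    """Regression: average the base learners' outputs."""
    return mean(learner(x) for learner in learners)


def majority_vote(labels):
    """Classification: return the most frequently predicted class."""
    return Counter(labels).most_common(1)[0][0]


rng = random.Random(0)
# Toy regression data: y = 2x plus Gaussian noise.
data = [(i / 10, 2 * (i / 10) + rng.gauss(0, 0.5)) for i in range(50)]
ensemble = bagging_fit(data, n_learners=25, rng=rng)
print(bagging_predict(ensemble, 2.5))
print(majority_vote(["cat", "dog", "cat"]))
```

Because every learner sees a slightly different sample, their individual predictions differ, and averaging them smooths out much of that variation.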

Random Forests

Random forests are essentially bagging applied to decision trees, with one small change. While growing each tree, the algorithm considers only a random subset of the features at each split instead of all of them. This is done to reduce the correlation between the trees: without it, trees grown on bootstrap samples of the same data tend to split on the same few strong features and end up very similar, so averaging them removes less variance. This small change usually results in a significant improvement in performance. Hence, random forests are generally preferable to plain bagging when the base learners are decision trees.
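One common way to apply the feature subsetting described above is to draw a fresh random subset of features every time a tree node is split. The sketch below shows only that single step, not a full tree or forest. The Gini impurity criterion, the square-root rule for the subset size, and the function names are assumptions made for illustration.

```python
import math
import random


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(rows, labels, feature_ids):
    """Best (impurity, feature, threshold), searching only the given features."""
    best = None
    for f in feature_ids:
        for threshold in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            if not left or not right:
                continue  # a split must send rows to both sides
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, threshold)
    return best


def random_forest_split(rows, labels, rng):
    """Split a node using only a random subset of the features."""
    n_features = len(rows[0])
    k = max(1, int(math.sqrt(n_features)))  # subset size: a common heuristic
    feature_ids = rng.sample(range(n_features), k)
    return feature_ids, best_split(rows, labels, feature_ids)


rows = [[1, 5, 0, 3], [2, 4, 1, 3], [3, 5, 0, 9], [4, 4, 1, 9]]
labels = [0, 0, 1, 1]
print(random_forest_split(rows, labels, random.Random(0)))
```

Since different nodes and different trees see different feature subsets, a single dominant feature cannot shape every tree in the same way.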

Boosting

Boosting is an iterative procedure involving a sequential form of ensemble construction. It is most commonly described for classification tasks, although boosting methods for regression also exist. In boosting, the base learners are added one after another to the ensemble, with each subsequent model placing more emphasis on the data that the previous models misclassified.

A popular example of the boosting approach is the AdaBoost algorithm, which places equal weights on all the training examples initially and, after each round, increases the weights of the examples that were misclassified. By this method, the ensemble gradually reduces the number of misclassifications on the training data. This method is used primarily to reduce the bias in the model.
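The reweighting idea can be sketched as follows. This is a simplified version of discrete AdaBoost for labels in {-1, +1}, using threshold rules ("decision stumps") on one-dimensional inputs as base learners. The toy data and all function names are assumptions for illustration only.

```python
import math


def stump_predict(threshold, polarity, x):
    """Predict `polarity` for x <= threshold and the opposite label otherwise."""
    return polarity if x <= threshold else -polarity


def fit_stump(xs, ys, weights):
    """Find the stump with the lowest weighted error: (error, threshold, polarity)."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(
                w for w, x, y in zip(weights, xs, ys)
                if stump_predict(threshold, polarity, x) != y
            )
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best


def adaboost_fit(xs, ys, n_rounds):
    n = len(xs)
    weights = [1.0 / n] * n  # start with equal weights
    ensemble = []  # list of (alpha, threshold, polarity)
    for _ in range(n_rounds):
        error, threshold, polarity = fit_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this learner's vote weight
        ensemble.append((alpha, threshold, polarity))
        # Misclassified examples (y * prediction = -1) get larger weights.
        weights = [
            w * math.exp(-alpha * y * stump_predict(threshold, polarity, x))
            for w, x, y in zip(weights, xs, ys)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]  # renormalize to sum to 1
    return ensemble


def adaboost_predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(a * stump_predict(t, p, x) for a, t, p in ensemble)
    return 1 if score >= 0 else -1


xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
for rounds in (1, 3):
    model = adaboost_fit(xs, ys, rounds)
    mistakes = sum(adaboost_predict(model, x) != y for x, y in zip(xs, ys))
    print(rounds, "round(s):", mistakes, "training mistakes")
```

No single threshold rule can fit these labels, so one round still leaves training mistakes. After three rounds the weighted vote of the stumps classifies every training point correctly, which is the bias reduction described above.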

Stacking

Stacking is a variant of ensemble learning wherein a number of models are trained on the data using different algorithms, and all of them are then combined to make a prediction, typically by a further model (often called a meta-learner) that is trained on the base learners’ outputs. Bagging and boosting differ from stacking in that they usually use the same algorithm to train all the base learners, whereas stacking uses a diverse set of algorithms. Stacking often performs very well in practice, although it is not guaranteed to beat its best base learner, and it adds training cost and complexity.
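Here is a deliberately small sketch of the stacking idea for one-dimensional regression. Two base learners built with different algorithms (a least-squares line and a 1-nearest-neighbour regressor) are combined by a very simple combiner that picks a blending weight on held-out data. The choice of base learners, the grid search over the weight, and the function names are assumptions for illustration. Practical stacking setups usually use a more flexible combining model and cross-validated predictions.

```python
def fit_line(data):
    """Base learner 1: ordinary least-squares line on 1-D inputs."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in data)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in data) / sxx
    return lambda x: mean_y + slope * (x - mean_x)


def fit_1nn(data):
    """Base learner 2: 1-nearest-neighbour regression."""
    return lambda x: min(data, key=lambda pair: abs(pair[0] - x))[1]


def squared_error(model, data):
    """Sum of squared errors of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in data)


def stacking_fit(train, holdout):
    """Fit both base learners on `train`, then choose the blend weight w
    in [0, 1] that minimizes the squared error on `holdout`."""
    first, second = fit_line(train), fit_1nn(train)

    def blend(w):
        return lambda x: w * first(x) + (1 - w) * second(x)

    w = min((i / 100 for i in range(101)),
            key=lambda w: squared_error(blend(w), holdout))
    return blend(w), w


# Toy data: y = x^2, so the line underfits while 1-NN follows the curve.
data = [(i / 10, (i / 10) ** 2) for i in range(40)]
train, holdout = data[::2], data[1::2]
stacked, weight = stacking_fit(train, holdout)
print("weight on the line model:", weight)
print("stacked holdout error:", squared_error(stacked, holdout))
```

Fitting the combiner on data the base learners did not see during training matters: otherwise the combiner would simply favour whichever base learner memorized the training set best.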

We’ve looked at some popular ensemble learning algorithms in this article. As has been mentioned before, ensemble learning is not a single fixed method: base learners can be generated and combined in many different ways. The ensemble approach is more of an art than a fixed set of rules, and you are unlikely to arrive at the best design on the first try. However, with enough practice, you can master the ensemble learning approach, and once you do, the applications are endless.