The previous Kubernetes tutorials focused on installing Kubernetes and creating configuration files. This tutorial demonstrates common tasks that help you optimize a Kubernetes cluster and keep it running smoothly. To complete it, you need access to a Kubernetes cluster. Alternatively, you can install minikube, which runs a single-node cluster on your local machine. Because minikube is simple to set up, it is used throughout this tutorial.

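First, enable Heapster and make sure it is running. Heapster collects monitoring and performance data for the cluster. The commands below start minikube, enable the Heapster addon, and list the services in the kube-system namespace so you can confirm that Heapster is running. Note that Heapster has since been retired; on current Kubernetes and minikube releases, the metrics-server addon provides resource metrics instead.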

    minikube start
    minikube addons enable heapster
    kubectl get services --namespace=kube-system

To isolate the exercises in this tutorial from the rest of the cluster, create a dedicated namespace with the command below:

    kubectl create namespace tutorial-exercises

The memory available to a container is controlled through the requests and limits fields in the resources section of its manifest. The sample pod manifest below sets a memory request and a memory limit for a container.

    apiVersion: v1
    kind: Pod
    metadata:
      name: memory-demo
    spec:
      containers:
      - name: memory-demo-ctr
        image: vish/stress
        resources:
          limits:
            memory: "160Mi"
          requests:
            memory: "80Mi"
        args:
        - -mem-total
        - 150Mi
        - -mem-alloc-size
        - 10Mi
        - -mem-alloc-sleep
        - 1s

In the manifest above, the memory limit is set to 160Mi (160 mebibytes) and the request to 80Mi. The stress program allocates 150Mi in total, which stays within the limit. A container can use more memory than its request when its node has memory to spare, but it cannot use more than its limit. If a container keeps allocating memory beyond its limit, it is terminated; if it can be restarted, the kubelet restarts it.
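
The Mi suffix is a binary unit: 1Mi is 2^20 = 1,048,576 bytes, so 160Mi is somewhat more than 160 MB. The Python sketch below is not part of the tutorial's workflow. It converts such quantities to bytes and classifies a usage figure against the request and limit from the manifest. The function names are hypothetical, and only the Ki, Mi and Gi suffixes are handled rather than every Kubernetes quantity format.

```python
# Simplified sketch: only the binary suffixes Ki, Mi and Gi are handled.
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '160Mi' to bytes."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: already a number of bytes


def describe_usage(usage: int, request: int, limit: int) -> str:
    """Classify a container's memory usage against its request and limit."""
    if usage > limit:
        return "over limit: the container is a candidate for termination"
    if usage > request:
        return "above request: allowed while the node has spare memory"
    return "within request"


request = to_bytes("80Mi")
limit = to_bytes("160Mi")
print(limit)  # 167772160
print(describe_usage(to_bytes("150Mi"), request, limit))
# above request: allowed while the node has spare memory
```

The 150Mi that the stress container allocates sits between the request and the limit, so it runs normally as long as the node has that memory free.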

The memory requests and limits of all containers in a pod add up to the pod's request and limit. Before a pod is scheduled, the scheduler must find a node with enough allocatable memory, not already requested by other pods, to satisfy the pod's memory request. If no node can satisfy it, the pod stays in the Pending status. A container with no memory limit can use all the memory available on its node, unless it runs in a namespace that has a default memory limit (set with a LimitRange), in which case that default is applied to the container.

Specifying memory requests and limits lets you use the memory of your cluster's nodes efficiently. Keeping a pod's memory request low improves its chance of being scheduled. Setting a limit higher than the request lets the pod burst into memory that happens to be available, while keeping the amount it can use during a burst reasonable.
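
To make the summing and the scheduling condition concrete, here is a simplified Python model. It is not the scheduler's actual code: it looks at memory only, whereas the real scheduler also checks CPU and other constraints, and the container names and node figures are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Container:
    name: str
    request_mi: int  # memory request in MiB
    limit_mi: int    # memory limit in MiB


def pod_totals(containers: List[Container]) -> Tuple[int, int]:
    """The pod's request and limit are the sums over its containers."""
    return (sum(c.request_mi for c in containers),
            sum(c.limit_mi for c in containers))


def fits_on_node(containers: List[Container], allocatable_mi: int,
                 already_requested_mi: int) -> bool:
    """Memory-only fit check: compare the pod request with the memory on
    the node that other pods have not requested. Limits play no part."""
    pod_request, _ = pod_totals(containers)
    return pod_request <= allocatable_mi - already_requested_mi


pod = [Container("app", 80, 160), Container("sidecar", 64, 128)]
print(pod_totals(pod))               # (144, 288)
print(fits_on_node(pod, 1024, 900))  # False: only 124Mi unrequested, so Pending
print(fits_on_node(pod, 1024, 512))  # True
```

Only the request decides placement, which is why a low request improves the odds of scheduling even when the limit is generous.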

The previous section covered memory allocation for pods and containers. This section covers resource allocation for a whole namespace. The total memory and CPU that a namespace may use are specified in a ResourceQuota object. Like a pod, the object is created by passing the path of its file to kubectl (for example, kubectl create -f followed by the file name). An example ResourceQuota specification is shown below:

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: namespace-resources
    spec:
      hard:
        requests.cpu: "2"
        requests.memory: 2Gi
        limits.cpu: "4"
        limits.memory: 6Gi

The quota above constrains the containers in the namespace as follows:

- Every container must specify a memory request, a memory limit, a CPU request, and a CPU limit.
- The memory requests of all containers together must not exceed 2Gi.
- The memory limits of all containers together must not exceed 6Gi.
- The CPU requests of all containers together must not exceed 2 CPUs.
- The CPU limits of all containers together must not exceed 4 CPUs.
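
The admission rules in this list can be sketched in Python as follows. This is a simplified model, not Kubernetes code. It assumes each pod has been reduced to totals over its containers (the real check also requires every individual container to specify the values), and it expresses CPU in millicores and memory in MiB. The usage figures are hypothetical.

```python
# Hard limits from the namespace-resources quota.
# CPU is in millicores (2 CPUs = 2000m), memory in MiB (2Gi = 2048Mi).
HARD = {
    "requests.cpu": 2000,
    "requests.memory": 2048,
    "limits.cpu": 4000,
    "limits.memory": 6144,
}


def admit(pod: dict, used: dict) -> tuple:
    """Decide whether a new pod fits under the quota.

    pod  -- the pod's totals for each quota key (summed over its containers)
    used -- what pods already running in the namespace consume
    """
    for key in HARD:
        if key not in pod:
            return False, f"rejected: {key} not specified"
    for key, hard in HARD.items():
        if used.get(key, 0) + pod[key] > hard:
            return False, f"rejected: would exceed {key}"
    return True, "admitted"


used = {"requests.cpu": 1500, "requests.memory": 1024,
        "limits.cpu": 3000, "limits.memory": 4096}
small = {"requests.cpu": 500, "requests.memory": 512,
         "limits.cpu": 1000, "limits.memory": 1024}
print(admit(small, used))                           # (True, 'admitted')
print(admit({**small, "requests.cpu": 600}, used))  # (False, 'rejected: would exceed requests.cpu')
```

A pod that exactly reaches a hard value (here 2000m of CPU requests) is still admitted; only going past it is rejected.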

When managing a cluster, it is important to control storage use as well as memory and CPU. The key aspects are the number of PersistentVolumeClaims (PVCs), the storage each claim may request, and the total storage requested in a namespace. A LimitRange lets you specify the minimum and maximum storage that a single PVC in a namespace can request. An example specification is shown below:

    apiVersion: v1
    kind: LimitRange
    metadata:
      name: storagelimits
    spec:
      limits:
      - type: PersistentVolumeClaim
        max:
          storage: 4Gi
        min:
          storage: 1Gi

With the specification above, every PersistentVolumeClaim in the namespace must request between 1Gi and 4Gi of storage; a claim outside that range is rejected.
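
The per-claim check amounts to a range test. The Python sketch below is an illustration only. It treats both bounds as inclusive, so a claim of exactly 1Gi or 4Gi is accepted, and it works in GiB rather than parsing quantity strings.

```python
# Bounds from the storagelimits LimitRange, in GiB (treated as inclusive).
MIN_GI = 1
MAX_GI = 4


def check_claim(request_gi: float) -> str:
    """Accept or reject a single PersistentVolumeClaim by its storage request."""
    if request_gi < MIN_GI:
        return "rejected: below the 1Gi minimum"
    if request_gi > MAX_GI:
        return "rejected: above the 4Gi maximum"
    return "accepted"


for size in (0.5, 1, 4, 5):
    print(size, check_claim(size))
```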

The number of PVCs in a namespace and their combined capacity are controlled with a ResourceQuota. An example specification is shown below:

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: storagequota
    spec:
      hard:
        persistentvolumeclaims: "8"
        requests.storage: "12Gi"

With the specification above, the namespace can hold at most 8 PersistentVolumeClaims, and the combined storage requested by all of its PVCs cannot exceed 12Gi. A claim that would break either limit is rejected.
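
Unlike the LimitRange, this quota looks at the namespace as a whole. A minimal Python sketch of the two conditions, using hypothetical claim sizes in GiB, might look like this:

```python
# Hard limits from the storagequota ResourceQuota.
MAX_CLAIMS = 8
MAX_TOTAL_GI = 12


def can_create_claim(existing_gi: list, new_gi: float) -> bool:
    """Return True if one more claim of new_gi GiB fits under the quota."""
    if len(existing_gi) + 1 > MAX_CLAIMS:
        return False  # the namespace already holds 8 claims
    return sum(existing_gi) + new_gi <= MAX_TOTAL_GI


existing = [4, 4, 3]  # GiB already requested by three claims (hypothetical)
print(can_create_claim(existing, 1))  # True: 12Gi in total
print(can_create_claim(existing, 2))  # False: 13Gi would exceed 12Gi
```

If the LimitRange from the previous example is also in force, each new claim must additionally fall between 1Gi and 4Gi on its own.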

When you create a pod, you can specify a command and arguments for each of its containers to run. They cannot be changed after the pod is created. A command and arguments defined in the manifest override the defaults provided by the container image. If you supply arguments without a command, the image's default entrypoint runs with the arguments you supplied. In the example below, the container runs printenv to print the HOSTNAME and KUBERNETES_PORT environment variables.

    apiVersion: v1
    kind: Pod
    metadata:
      name: example-arg
      labels:
        purpose: show-use-of-command
    spec:
      containers:
      - name: my-container1
        image: debian
        command: ["printenv"]
        args: ["HOSTNAME", "KUBERNETES_PORT"]
      restartPolicy: OnFailure
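
In Kubernetes, a container's command corresponds to the image's Entrypoint and its args to the image's Cmd. The Python function below sketches how the two sources combine. The function name is hypothetical. The example assumes the debian image defines no Entrypoint and a Cmd of ["bash"].

```python
from typing import List, Optional


def effective_command(image_entrypoint: List[str], image_cmd: List[str],
                      command: Optional[List[str]] = None,
                      args: Optional[List[str]] = None) -> List[str]:
    """Combine a container's command/args with the image defaults."""
    if command is None and args is None:
        return image_entrypoint + image_cmd  # nothing overridden
    if args is None:
        return command                       # image Entrypoint and Cmd ignored
    if command is None:
        return image_entrypoint + args       # image Cmd replaced by args
    return command + args                    # both overridden


# Assumption: the debian image has no Entrypoint and a Cmd of ["bash"].
ENTRYPOINT, CMD = [], ["bash"]
print(effective_command(ENTRYPOINT, CMD, ["printenv"], ["HOSTNAME", "KUBERNETES_PORT"]))
# ['printenv', 'HOSTNAME', 'KUBERNETES_PORT']
```

Note the third case. Supplying only args keeps the image's Entrypoint but discards its Cmd.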

When creating a pod, you can set environment variables for its containers with the env or envFrom fields. The configuration file below uses env:

    apiVersion: v1
    kind: Pod
    metadata:
      name: vars-example
      labels:
        purpose: explain-vars
    spec:
      containers:
      - name: envar-demo-container
        image: gcr.io/google-samples/node-hello:1.0
        env:
        - name: COURSE
          value: "I am learning Kubernetes"

Environment variables can also make pod and container fields, such as the pod's name or the container's resource limits, available to the running container through the downward API.

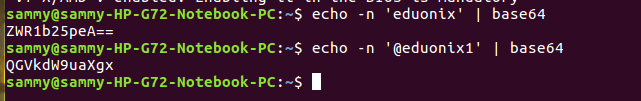
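
The manifest above sets COURSE with env. envFrom instead imports every key of a source such as a ConfigMap. When both are used, a key defined in env takes precedence over the same key from envFrom, and among envFrom sources the last one wins. The Python sketch below models only that precedence. It is not Kubernetes code, and the ConfigMap contents are hypothetical.

```python
from typing import Dict, List


def build_environment(env_from: List[Dict[str, str]],
                      env: List[Dict[str, str]]) -> Dict[str, str]:
    """Simplified model of how env and envFrom combine.

    env_from -- key/value maps, e.g. the data of ConfigMaps listed in envFrom
    env      -- entries like {"name": "COURSE", "value": "..."}
    """
    result: Dict[str, str] = {}
    for source in env_from:   # a later source wins on duplicate keys
        result.update(source)
    for entry in env:         # env entries take precedence over envFrom
        result[entry["name"]] = entry["value"]
    return result


config_map = {"COURSE": "from a ConfigMap", "LEVEL": "beginner"}  # hypothetical
env = [{"name": "COURSE", "value": "I am learning Kubernetes"}]
print(build_environment([config_map], env))
# {'COURSE': 'I am learning Kubernetes', 'LEVEL': 'beginner'}
```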

When working with a cluster, you will often need to handle sensitive information such as passwords and encryption keys; Kubernetes stores such data in Secret objects. For example, to store the username eduonix and the password @eduonix1, first convert them to base64 with the commands below:

    echo -n 'eduonix' | base64
    echo -n '@eduonix1' | base64
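
The same encoding can be reproduced with Python's standard base64 module, shown below as a cross-check. The helper names are hypothetical. The -n flag matters: without it, echo appends a newline, which would be encoded as well. Because @eduonix1 is 9 bytes, a multiple of 3, its encoding needs no = padding.

```python
import base64


def encode(value: str) -> str:
    """Base64-encode a string, as echo -n '...' | base64 does."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode(encoded: str) -> str:
    """Reverse the encoding; anyone can do this, so base64 is not encryption."""
    return base64.b64decode(encoded).decode("utf-8")


print(encode("eduonix"))            # ZWR1b25peA==
print(encode("@eduonix1"))          # QGVkdW9uaXgx
print(decode(encode("@eduonix1")))  # @eduonix1
```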

Next, put the encoded values in a Secret configuration file as shown below:

    apiVersion: v1
    kind: Secret
    metadata:
      name: test-secret
    data:
      username: ZWR1b25peA==
      password: QGVkdW9uaXgx

To make the secret data available to a pod, mount the Secret as a volume, as in the configuration file below:

    apiVersion: v1
    kind: Pod
    metadata:
      name: secret-test-pod
    spec:
      containers:
      - name: test-container
        image: nginx
        volumeMounts:
        - name: secret-volume
          mountPath: /etc/secret-volume
      volumes:
      - name: secret-volume
        secret:
          secretName: test-secret

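In the configuration file above, the Secret test-secret is mounted at /etc/secret-volume. Each key in the Secret becomes a file in that directory (username and password), and each file contains the decoded value.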
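
An application in the container reads the secret by reading those files. The Python sketch below shows one way to do that. The function name is hypothetical. It skips entries whose names start with a dot, because the kubelet keeps internal bookkeeping entries with such names in the mount.

```python
from pathlib import Path
from typing import Dict


def read_secret_volume(path: str = "/etc/secret-volume") -> Dict[str, str]:
    """Return {key: value} for each file in a mounted Secret volume."""
    values = {}
    for entry in sorted(Path(path).iterdir()):
        if entry.name.startswith(".") or not entry.is_file():
            continue  # skip hidden bookkeeping entries and directories
        values[entry.name] = entry.read_text()
    return values


MOUNT = Path("/etc/secret-volume")
if MOUNT.is_dir():  # true only inside a pod that mounts test-secret
    print(read_secret_volume(str(MOUNT)))
```

Inside secret-test-pod this would be expected to return the username and password keys with their decoded values. Outside the pod the guard simply skips the call.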

This tutorial explained the roles of Heapster and namespaces and showed how to control the CPU, memory, and storage used by containers, pods, and namespaces. It also covered setting container commands and arguments, defining environment variables, and passing sensitive data to pods with Secrets.