In machine learning, multiclass or multinomial classification is the problem of classifying instances into one of three or more classes. While many classification algorithms naturally permit the use of more than two classes, some are by nature binary algorithms. These can, however, be turned into multinomial classifiers by a variety of strategies.

One of the algorithms for solving multiclass classification is softmax regression. Another approach is to divide the problem into multiple binary classification problems: train one binary classifier per class, and choose the class whose classifier gives the highest output as the answer.
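The idea of one binary classifier per class can be sketched as follows. This is only an illustration: the three scoring functions are hypothetical stand-ins for trained binary classifiers, and the petal-length constants in them are made up.

```python
# One binary scorer per class; the prediction is the class with the highest score.
# The scorers below are hypothetical placeholders, not trained models.

def score_setosa(x):
    # Pretend confidence that x is setosa (short petals).
    return 1.0 - x["len_petal"] / 7.0

def score_versicolor(x):
    # Pretend confidence that x is versicolor (medium petals).
    return 1.0 - abs(x["len_petal"] - 4.3) / 7.0

def score_virginica(x):
    # Pretend confidence that x is virginica (long petals).
    return x["len_petal"] / 7.0

SCORERS = {
    "setosa": score_setosa,
    "versicolor": score_versicolor,
    "virginica": score_virginica,
}

def predict_one_vs_rest(x):
    # Evaluate every binary scorer and return the label with the highest output.
    scores = {label: scorer(x) for label, scorer in SCORERS.items()}
    return max(scores, key=scores.get)
```

With real models, each scorer would be a separately trained binary classifier, which is where the cost grows as classes are added.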

The issue with the above approach is that it becomes computationally inefficient and hard to scale as the number of output classes increases. One solution is to combine the softmax function with regression. The classes are represented as one-hot vectors, with probability 1 for the true class and 0 for all other classes.

The model's output is likewise a vector of probabilities, one per class, describing how likely a particular input is to belong to each class. The difference between the predicted and true probability vectors is measured as a cross-entropy loss, and the model is trained by minimizing that loss.

Consider the iris dataset. Each sample has 4 input values, the length and width of its petals and sepals, and the output/class takes one of 3 values: setosa, versicolor and virginica.

Therefore, here we have c = 3 classes, {setosa, versicolor, virginica}, and a set of input vectors {x1, x2, x3, x4, …}, where each input vector is x = {len_petal, len_sepal, wid_petal, wid_sepal}.

Here, classes {setosa, versicolor, virginica} can be represented as {[1,0,0], [0,1,0], [0,0,1]}.
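This one-hot representation can be produced with a small helper. The sketch below assumes the class order {setosa, versicolor, virginica} used in the text; the names `CLASSES` and `one_hot` are illustrative.

```python
CLASSES = ["setosa", "versicolor", "virginica"]

def one_hot(label, classes=CLASSES):
    # 1 at the position of the given label, 0 everywhere else.
    if label not in classes:
        raise ValueError(f"unknown class: {label}")
    return [1 if c == label else 0 for c in classes]
```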

Let's initialize the weights as theta =

[ [t11, t12, t13], [t21, t22, t23], [t31, t32, t33], [t41, t42, t43] ]

Softmax Function and Regression

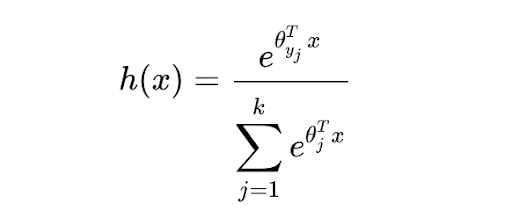

The softmax function takes as input a vector z of K real numbers and normalizes it into a probability distribution consisting of K probabilities. After applying softmax, each component will be in the interval (0,1) and the components will add up to 1, such that they can be interpreted as probabilities.
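As a minimal sketch, the softmax function can be written in a few lines of Python using only the standard library. Subtracting the maximum before exponentiating is a common numerical-stability trick and does not change the result; the input values are made up.

```python
import math

def softmax(z):
    # Shift by the maximum so that exp() cannot overflow for large inputs.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    # Each component is divided by the sum, so the outputs add up to 1.
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
```

Larger inputs receive larger probabilities, and equal inputs receive equal probabilities.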

According to the softmax function, the final output would be softmax(theta transpose X).

Here, theta transpose X is computed as a series of dot products: each row of theta transpose (one row per class) is multiplied with the input vector, and each dot product yields a single numeric value.

Collecting these values, one per row, gives a vector of scores with one entry per class.

The softmax function normalizes all the values from the above theta transpose X product so that the final output is a set of probabilities, with each value in (0,1) and all values summing to 1.

For example, the iris dataset has 3 classes {setosa, versicolor, virginica} and input values/vector X =

[ x1, x2, x3, x4 ].

Hence the theta or weight matrix would be of size 4 X 3, so that theta transpose, of size 3 X 4, maps 4 inputs to 3 output values.

Let theta = [ [t11, t12, t13], [t21, t22, t23], [t31, t32, t33], [t41, t42, t43] ]

In theta transpose X, we take each row of theta transpose and compute its dot product with the input vector: (1 X 4).(4 X 1) = (1 X 1), a single value.

Hence, for each row, we get a single numeric value, which gives an intermediate vector of dimension (3 X 1) in this case. The softmax function is then applied to that intermediate vector to normalize all values.

Theta transpose =

[ r1=>[t11, t21, t31, t41], r2=>[t12, t22, t32, t42], r3=>[t13, t23, t33, t43] ]

Hence, theta transpose X = intermediate vector =

[ r1.X (1X4 dot 4X1), r2.X (1X4 dot 4X1), r3.X (1X4 dot 4X1) ]
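The intermediate vector can be computed as in the sketch below. It assumes theta is stored as a 4 X 3 nested list (one row per input, one column per class), so column k of theta is row k of theta transpose. The weights and input are made-up integers chosen to make the arithmetic easy to follow.

```python
def scores(theta, x):
    # theta: one row per input feature, one column per class (4 x 3 here).
    # Entry k of the result is row k of theta transpose dotted with x.
    n_features, n_classes = len(theta), len(theta[0])
    if len(x) != n_features:
        raise ValueError("x must have one value per row of theta")
    return [sum(theta[i][k] * x[i] for i in range(n_features))
            for k in range(n_classes)]

# Made-up weights and a made-up input vector, for illustration only.
theta = [
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
    [1, 1, 1],
]
x = [1, 2, 3, 4]
z = scores(theta, x)  # [1*1 + 1*4, 1*2 + 1*4, 1*3 + 1*4] = [5, 6, 7]
```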

After softmax formula,

calculated vector =

[ e^r1.X/total, e^r2.X/total, e^r3.X/total ]

Where total = e^r1.X + e^r2.X + e^r3.X

This calculated output can be compared with the true label, which is one of {setosa, versicolor, virginica}, encoded as one of {[1,0,0], [0,1,0], [0,0,1]}.

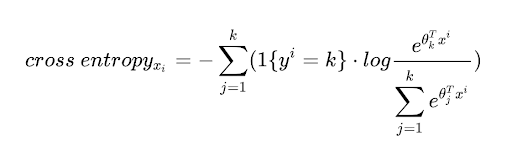

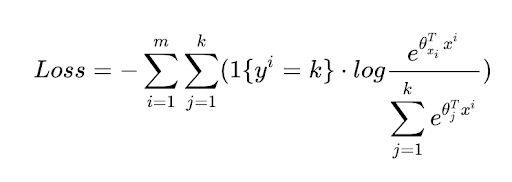

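The loss between the predicted and true vectors can be calculated and is called cross-entropy. For a single example with one-hot label y and predicted probabilities p, the formula for cross-entropy is:

loss = -(y1*log(p1) + y2*log(p2) + y3*log(p3))

The total cross-entropy or loss is the sum of the cross-entropies of all training examples.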

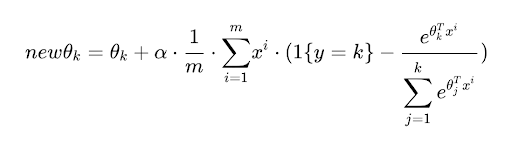
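The cross-entropy calculation can be sketched in Python as follows. The function names and the small `eps` guard against log(0) are assumptions made for this illustration, and the probability values in the usage line are made up.

```python
import math

def cross_entropy(y_true, y_pred, eps=1e-12):
    # y_true is a one-hot label, y_pred a vector of predicted probabilities.
    # Only the term for the true class is non-zero; eps avoids log(0).
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

def total_loss(labels, predictions):
    # Sum of the per-example cross-entropies.
    return sum(cross_entropy(t, p) for t, p in zip(labels, predictions))

example = cross_entropy([0, 1, 0], [0.2, 0.5, 0.3])  # equals -log(0.5)
```

A confident correct prediction gives a loss near 0, while a low probability on the true class gives a large loss.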

The main objective is to minimize the loss. To do so, the theta values need to be adjusted, and gradient descent can be used to train the model.

At each step, gradient descent computes new theta values from the gradient of the loss with respect to the weights.

The weight values are updated repeatedly while training on the dataset, each time in the direction that reduces the loss. After training, the model is capable of predicting the output label for a new input.
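A minimal sketch of this training loop, using only the standard library, is shown below. It relies on the standard result that, for softmax with cross-entropy, the gradient of the loss with respect to theta[i][k] is x[i] * (p[k] - y[k]). The toy data (2 inputs, 3 classes), the learning rate, the number of steps, and all function names are assumptions chosen for illustration, not values from the iris dataset.

```python
import math

def softmax(z):
    m = max(z)  # shift for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(theta, x):
    # Score for class k is sum_i theta[i][k] * x[i]; softmax turns scores into probabilities.
    n_classes = len(theta[0])
    z = [sum(theta[i][k] * x[i] for i in range(len(x))) for k in range(n_classes)]
    return softmax(z)

def loss(theta, data):
    # Total cross-entropy over (x, one-hot y) pairs.
    return -sum(math.log(predict_proba(theta, x)[y.index(1)]) for x, y in data)

def gradient_step(theta, data, lr=0.1):
    # Accumulate x[i] * (p[k] - y[k]) over all examples, then move against the gradient.
    grad = [[0.0] * len(theta[0]) for _ in theta]
    for x, y in data:
        p = predict_proba(theta, x)
        for i in range(len(theta)):
            for k in range(len(theta[0])):
                grad[i][k] += x[i] * (p[k] - y[k])
    return [[theta[i][k] - lr * grad[i][k] for k in range(len(theta[0]))]
            for i in range(len(theta))]

# Made-up toy data: 2 input values, 3 classes.
data = [
    ([1.0, 0.0], [1, 0, 0]),
    ([0.0, 1.0], [0, 1, 0]),
    ([1.0, 1.0], [0, 0, 1]),
]
theta = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
initial_loss = loss(theta, data)
for _ in range(200):
    theta = gradient_step(theta, data)
final_loss = loss(theta, data)
```

Starting from all-zero weights, every class gets probability 1/3, so the initial loss is 3 * log(3); the loss after training is lower.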

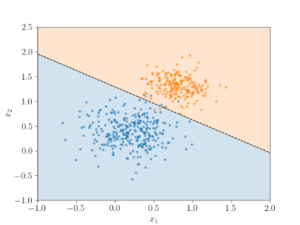

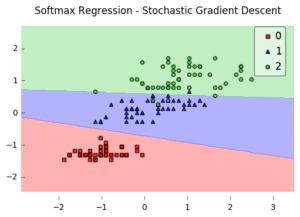

To sum up, softmax regression aims to create boundary lines/areas for each class. The dimension depends on the size of the input vector and the number of output classes. For example, for a use case with 2 inputs and 2 class outputs, the boundaries for each class would look something like this:

The number of inputs determines the dimension of the graph, and the number of lines corresponds to the number of output classes. Softmax isn’t the only way of solving classification problems. Softmax regression is a linear model, so it works best when the classes are linearly separable. When they are not, other methods such as support vector machines, decision trees, and k-nearest neighbors are recommended.