Natural Language Processing (NLP) is a subfield of Artificial Intelligence that allows computers to read, comprehend, and deduce meaning from human languages. It combines linguistics and computer science to analyze language structure guidelines and create models that can understand, break down, and isolate important elements from a text or speech. NLP may be viewed as the process of teaching a computer a human language in the same way that we teach a kid. It has been ingrained in our daily lives since it is used in various technologies such as voice assistance, autocorrecting software, text-to-speech accessibility, sentiment analysis, and many more.

The text must first be converted into a machine-readable format before an NLP model is implemented. As machines cannot directly grasp complicated human languages, preprocessing of the texts is necessary to turn them into forms that the computers can understand. To convert text data into a numerical representation, we employ encoding techniques such as Bag Of Word (BoW), Bi-gram, n-gram, TF-IDF, and Word2Vec. However, some preprocessing of the data is required before applying these encoding techniques. Text Preprocessing includes Tokenization, Stemming, Lemmatization, TFIDF, and a few other stages. This article will cover all these processes along with their examples using the Python programming language.

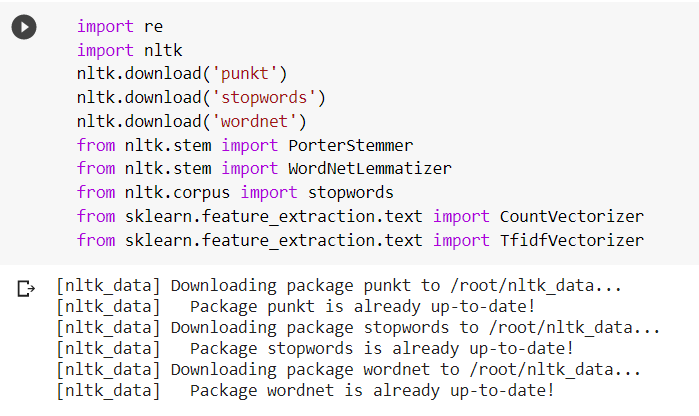

Importing the Required Libraries and The Text For Preprocessing

We need to include some external Python libraries before we can start cleaning the text. The ‘re’ (Regular Expression) library is used to clean up the text by performing various operations on strings.

‘nltk’ is an essential library required for Text Preprocessing. With the help of its built-in methods, this package makes preprocessing tasks like tokenization, lemmatization, and stemming relatively simple.

We must additionally download the Punkt Sentence Tokenizer, Wordnet Lemmatizer, and Stopword after importing the nltk library. The Punkt tokenizer separates a text into a list of sentences by building a model for abbreviated words, collocations, and words that start sentences using an unsupervised method. The Wordnet tokenizer is used for lemmatization operations and the Stopwords tokenizer for removing the stopwords from the text.

The PorterStemmer package allows performing stemming operations.

The Stopwords package allows the removal of stop words from the text.

The CountVectorizer and TfidfVectorizer packages from the sklearn library are used for vectorizing the text data.

For this tutorial, we will use a text paragraph copied from Wikipedia. This text is stored in a variable named ‘txt’.

Tokenization

Tokenization is the process of converting the text into smaller units or segments known as tokens. The tokens can be sentences, words, or subwords (n-gram characters). These tokens aid in the comprehension of the context or the development of the NLP model. By evaluating the sequence of words, tokenization aids in deciphering the meaning of the text.

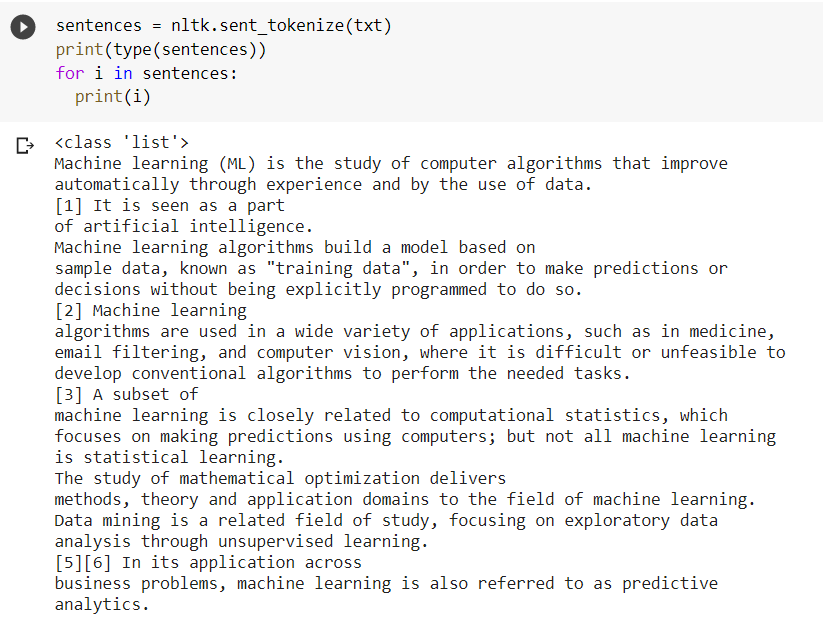

The text is first split into discrete sentences using the sent_tokenize() method. It returns a list whose elements are sentences of the input text.

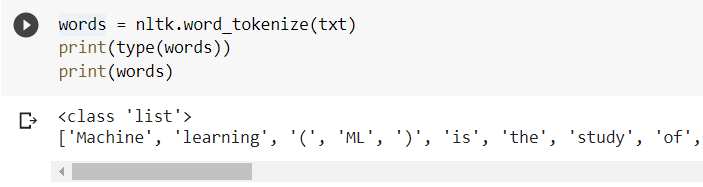

The word_tokenize() function can also be used to split the sentences into individual words if necessary.

The word_tokenize() function can also be used to split the sentences into individual words if necessary.

The text has now been divided into discrete sentences or words, allowing for further processing operations.

Stemming, Lemmatization and Removal of Stop Words

In the discipline of Natural Language Processing, stemming and lemmatization are text normalization procedures that are used to prepare text, words, and documents for further processing. Stemming is the process of reducing inflected words to their word stem. In natural language processing, stemming is an integral aspect of the pipelining process. For example, the words “final”, “Finally” and “Finalize” is stemmed to the word “Fina”.

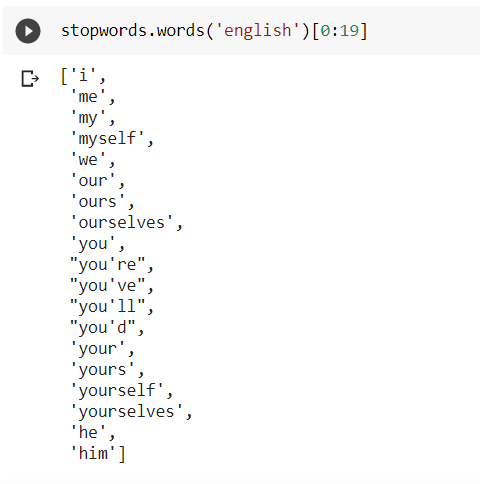

We will eliminate the stopwords from the text as well as stemming it. Stopwords are words that do not contribute much sense to sentences (e.g., “the,” “am,” “was,” and so on). These words can be avoided while developing any NLP model. The following code snippet shows the first 20 stopwords in the English language.

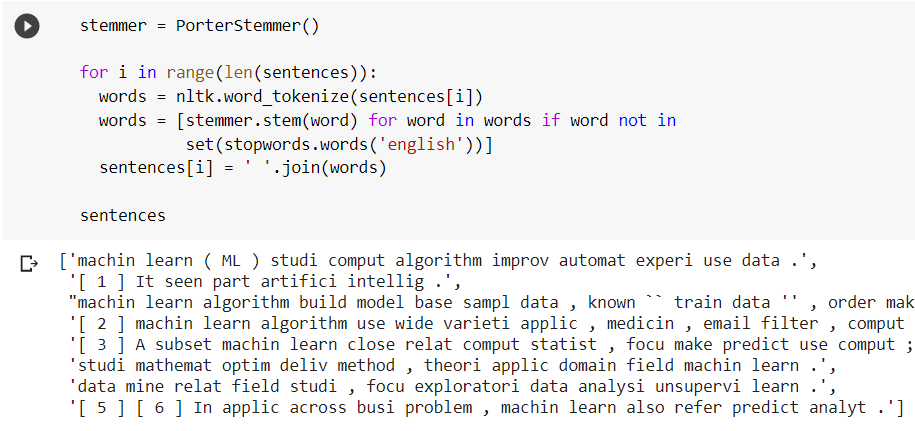

We will use a for loop to stem the text while deleting stopwords from the sentences. The for loop examines the text for stopwords and deletes them while stemming the words that are not stopwords.

The iterator – word takes each element from the list – words and stems them if they are not stopwords; otherwise, it discards them.

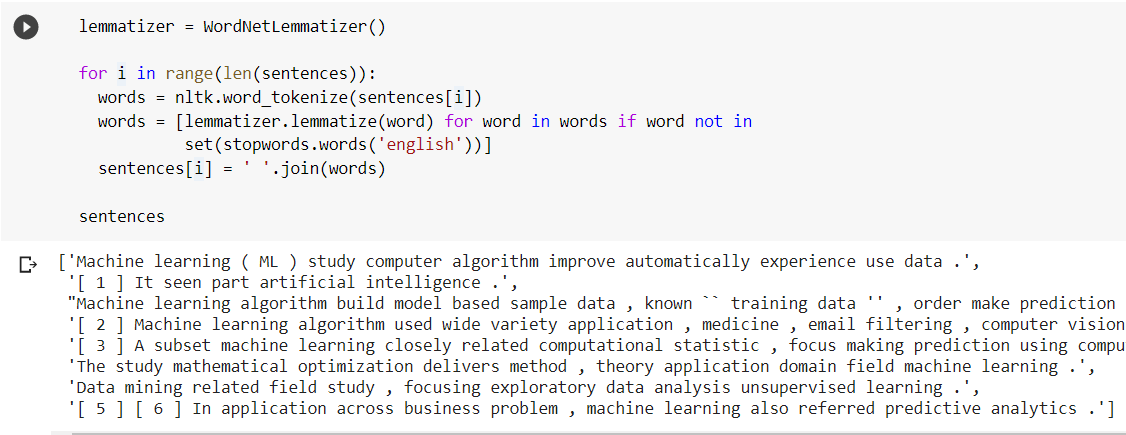

All of the words in the list- sentences have now been stemmed, and the stopwords have been eliminated. However, as can be seen, the stemmed words have no proper meaning. For example, the word ‘machine’ was converted to the word ‘machin,’ while the word ‘artificial’ was converted to the word ‘artifici.’ This might be viewed as a disadvantage of stemming. Lemmatization tackles this problem by converting the inflicted words into shorter, more meaningful ones. Lemmatization works in a similar way to stemming in that it reduces words to their stem words or shorter forms while maintaining their meaning.

Although lemmatization has reduced the words to smaller ones, they nevertheless have correct meanings.

Bag of words in NLP

We cannot supply the text directly as input since computers cannot interpret language. Before providing the words to the computer, they must be transformed into vectors. This process may be referred to as feature extraction. The Bag of Words (BoW) is a quite common feature extraction technique. In this technique, we look at the histogram of the words present in the text, using each word count as a feature. Before we proceed, we first need to lower all the words as an uppercase and a lowercase word, though they may be the same words, might be considered as two different words.

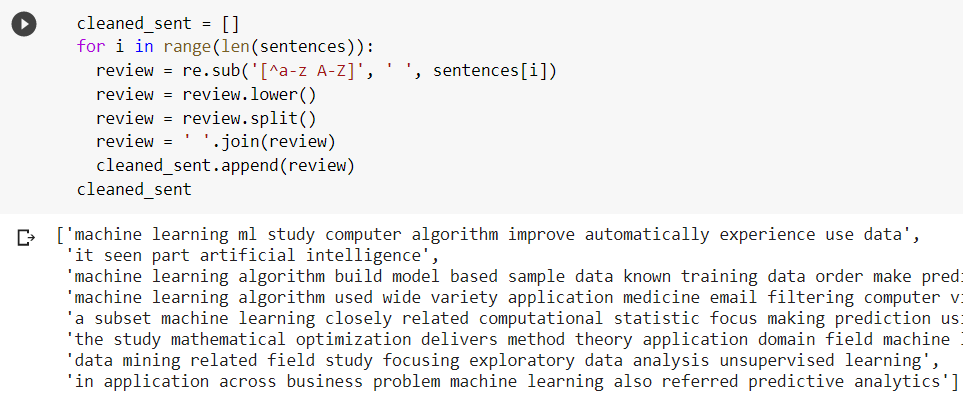

We clean the lemmatized text before converting it to vectors by eliminating any punctuation and special symbols. The sub() method from the ‘re’ library replaces all the characters except alphabets with blank spaces. In addition, the lower() function lowers the case of all the words. These cleaned sentences are stored in the ‘cleaned_sent’ list.

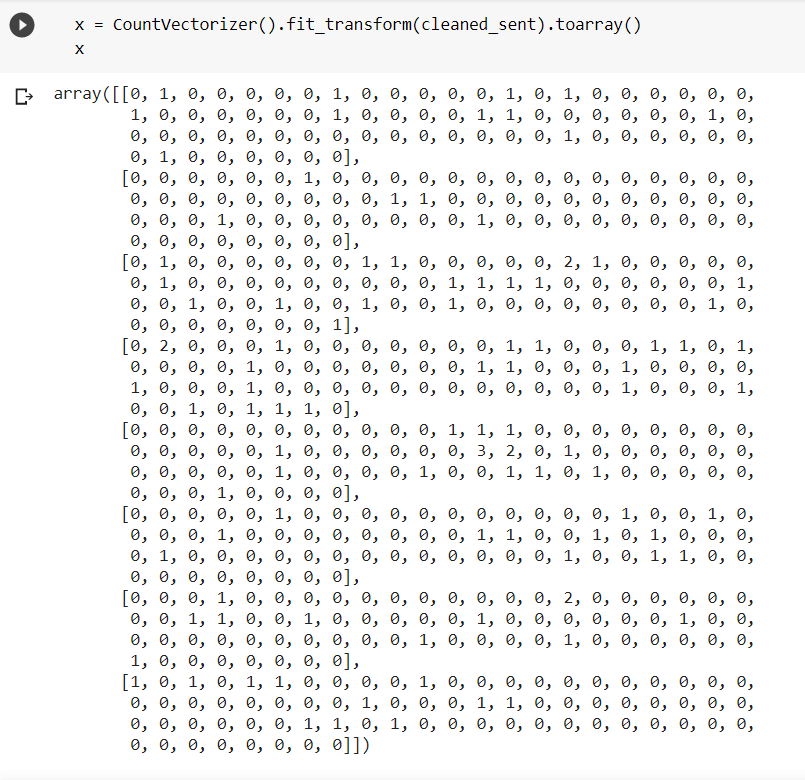

Now the next step is creating the BoW. For this, we will use the fit_transform() method from the ‘sklearn’ library.

We have now converted the text into an array or vector format, which is ready to be used for machine learning algorithms.

TFIDF Technique for Text-to-Vector Conversion

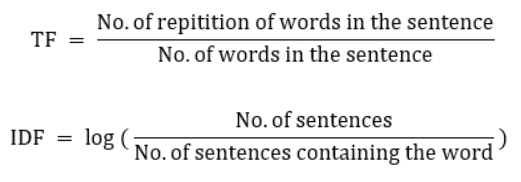

Although the BoW approach is simple to use, it does not specify the significance of words in sentences because it only displays whether or not a word is present. The TFIDF (Term Frequency and Inverse Document Frequency) method, on the other hand, defines the significance of each word in the sentence, giving it an edge over BoW for training NLP models.

The TFIDF technique involves two terms – Term Frequency (TF) and Inverse Document Frequency (IDF). Each word in the text has its TF and IDF score, and the product of these two scores gives the weight or importance of that specific word. The mathematical expressions for calculating TF and IDF scores are given below.

We utilize the TfidfVectorizer() function from the ‘Scikitlearn’ package to turn the lemmatized text into a vector.

This vector not only indicates the presence of the words in a sentence but also associates weights to those words indicating their importance.

Other Convenient Ways to Convert Words into Vectors

We have seen two simple and effective methods (BoW and TFIDF) for converting text data to a vector representation. There are also many convenient and efficient methods for converting words into vectors, such as one-hot encoding, n-gram, Point Mutual Information (PMI), Hashing Vectorizer, utilizing python modules like Word2Vec, Gensim, Spacy, and others. These external libraries have many built-in functions which make the word to vector conversion very convenient and efficient.

Also Read: An introduction to Natural Language Processing using Natural Language ToolKit NLTK