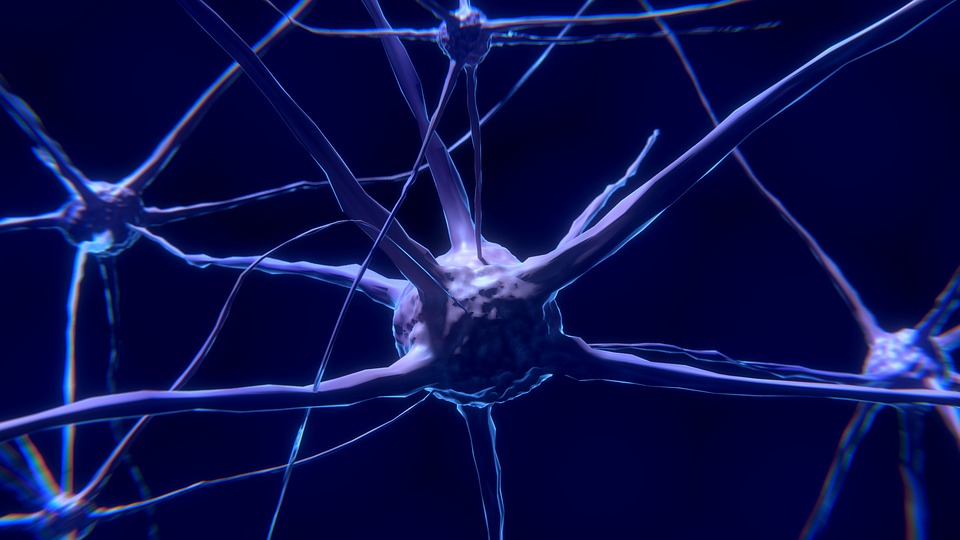

Nature has inspired many of the technologies we enjoy today, and the neural network, the foundation of deep learning, is no exception.

The way neurons connect in the animal body served as the foundational model for this construction. In the body, information travels through chains of neurons as signals that can, for example, stimulate muscles and make them move.

Many people assume that the idea of a neural network is relatively new. In fact, it is much older than it seems.

Its groundwork was laid as early as the late 1800s.

Preliminary Theories

Alexander Bain was the first to present a study proposing that repetitive actions contribute to building memory. He believed that repeated activities strengthened the connections between the neurons involved. Bain was a Scottish educationist and philosopher whose interests spanned ethics, linguistics, logic and education reform. The scientific community was skeptical of his theories at the time.

William James was the first person to offer courses in psychology in the US. As one of the leading thinkers of the nineteenth century, he was well placed to tackle the immensity of the human brain. At 48, he presented a conclusion almost identical to Bain's; however, he maintained that memories and actions resulted from electrical currents flowing among the neurons of the brain.

In 1898, C.S. Sherrington ran tests to verify James's theory by passing electrical signals through the spinal cords of rats. Instead of growing stronger with repeated stimulation, however, the response kept weakening with each attempt. This work later helped establish the concept of habituation.

The genesis of Neural networks

Warren Sturgis McCulloch and Walter Pitts Jr. created a computational model of neural networks based on mathematics and algorithms, which they called threshold logic. In their 1943 paper “A Logical Calculus of Ideas Immanent in Nervous Activity,” they proposed that machines built by combining such mathematics and algorithms could mimic human thought.

This was a significant step in the understanding of neural networks because it split the field in two: one branch studied biological neural networks in living beings, while the other applied neural networks to machines so that they could think as humans do.
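McCulloch and Pitts's threshold logic can be illustrated with a unit that outputs 1 only when the weighted sum of its binary inputs reaches a threshold. The Python sketch below is a simplified modern rendering rather than their original notation; the function names and the use of a negative weight to model inhibition are illustrative assumptions.

```python
def threshold_unit(inputs, weights, threshold):
    """Return 1 if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def AND(a, b):
    # Fires only when both inputs are active
    return threshold_unit([a, b], [1, 1], threshold=2)


def OR(a, b):
    # Fires when at least one input is active
    return threshold_unit([a, b], [1, 1], threshold=1)


def NOT(a):
    # Assumption: inhibition is modeled here as a negative weight
    return threshold_unit([a], [-1], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, AND(a, b), OR(a, b))
```

Because AND, OR and NOT together can express any Boolean function, networks of such units can in principle compute any logical proposition, which is the heart of the 1943 argument.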

In the late 1940s, the psychologist Donald Hebb proposed what is now called Hebbian learning, one of the first unsupervised learning rules. Researchers began applying these ideas to computational models in 1948 with Turing's B-type machines.
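Hebb's idea, often summarized as “cells that fire together wire together,” can be written as the update `delta_w_i = eta * x_i * y`, where `x_i` is the activity of an input neuron, `y` the activity of the output neuron and `eta` a learning rate. The minimal Python sketch below applies that rule; the learning rate and the example pattern are arbitrary assumptions chosen for illustration.

```python
def hebbian_update(weights, pre, post, learning_rate=0.1):
    """Hebb's rule: grow each weight in proportion to pre- times post-synaptic activity."""
    return [w + learning_rate * x * post for w, x in zip(weights, pre)]


# Hypothetical example: inputs 0 and 2 are repeatedly active together with the output
weights = [0.0, 0.0, 0.0]
pattern = [1, 0, 1]
for _ in range(5):  # repeat the same co-activation five times
    weights = hebbian_update(weights, pattern, post=1)
print(weights)
```

Only the weights from inputs that fired together with the output grow, echoing Bain's earlier intuition that repetition strengthens connections. Plain Hebbian learning places no limit on weight growth; later variants such as Oja's rule add normalization.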

Alan Turing, the British mathematician best known for breaking German military codes during the Second World War, argued that machines could be trained to work like humans. In 1947, he predicted that machine learning would grow into a field capable of taking over laborious tasks from people, with a huge impact on jobs.

In his 1950 paper “Computing Machinery and Intelligence,” he asked whether a machine can think. He framed the question as the Imitation Game, which later became known as the Turing test. In the test, a machine holds a text conversation with a human being. If, within five minutes, the person believes he or she is talking to another human, the machine passes; no machine was claimed to pass for roughly the next 60 years.

Artificial Intelligence finally brought to life

Arthur Samuel was one of the most celebrated pioneers of artificial intelligence, and he also played a key role in the development of computer gaming. In 1959, he coined the term “machine learning.”

He joined IBM's laboratory in Poughkeepsie in 1949, where he worked on some of the first software hash tables. He also contributed to early research on using transistors in computers.

He later developed a checkers-playing program that was among the first self-learning programs and an early example of artificial intelligence made real.

In 1958, Frank Rosenblatt created the perceptron, a pattern-recognition algorithm based on a two-layer learning network that uses simple addition and subtraction. He described it in a well-documented report, “The Perceptron: A Perceiving and Recognizing Automaton.” He is often credited with laying the foundations of deep neural networks (DNNs).
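The perceptron learning rule does rely on addition and subtraction: when the unit misclassifies an example, the input vector is added to the weights (if the output should have been 1) or subtracted from them (if it should have been 0). The Python sketch below is a common textbook form of that rule, trained on the logical AND function as a hypothetical example; it is not a reproduction of Rosenblatt's original hardware.

```python
def predict(weights, bias, x):
    """Output 1 if the weighted sum plus bias is positive, else 0."""
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20):
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                # Add the input after a false negative, subtract it after a false positive
                weights = [w + error * xi for w, xi in zip(weights, x)]
                bias += error
                mistakes += 1
        if mistakes == 0:  # every sample classified correctly, so stop early
            break
    return weights, bias


# Hypothetical training set: the logical AND function
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
print(weights, bias)
```

The rule is guaranteed to converge only when the two classes are linearly separable, which is why a single perceptron can learn AND but not XOR.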

David H. Hubel and Torsten Wiesel, both neurophysiologists and Nobel laureates, discovered two types of cells in the primary visual cortex: simple cells and complex cells. Although they were not working on artificial systems, these biological findings helped pave the way for artificial neural networks.

In 1965, Alexey Ivakhnenko and his associate V.G. Lapa used the theories of the time to build the first working deep learning networks. Ivakhnenko played a key role in developing the GMDH (Group Method of Data Handling) and applying it to neural networks, which is one reason he is often called the father of modern deep learning.

In 1971, Ivakhnenko described an 8-layer deep network and demonstrated a computer's learning process in a computer identification system called Alpha.

Visual Pattern Learning successfully identified by an ANN

Kunihiko Fukushima is widely known for creating the Neocognitron, an artificial neural network that recognizes and deciphers visual patterns. His work led to the first convolutional neural networks by the early 1980s. Fukushima was strongly influenced by Hubel and Wiesel's findings, which is why the Neocognitron was modeled on the organization of the visual cortex in animals.
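The core operation behind convolutional networks is sliding a small filter over an image and summing element-wise products at each position, so the same local feature detector is reused everywhere, loosely like the simple cells Hubel and Wiesel described. The Python sketch below is a generic illustration rather than the Neocognitron's actual architecture; the 2x2 edge-detecting kernel and the tiny image are made-up examples.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products at each position.

    Like most deep-learning libraries, this does not flip the kernel, so it is
    technically a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out


# Hypothetical 4x4 image: dark left half, bright right half (a vertical edge)
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical vertical-edge detector
kernel = [
    [-1, 1],
    [-1, 1],
]
print(convolve2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The strong response in the middle column marks where the image changes from dark to bright; a CNN learns many such kernels from data instead of having them set by hand.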

In 1982, John Hopfield created an associative neural network that is now named after him: the Hopfield network.

Hopfield networks are a form of recurrent neural network built from binary threshold nodes, and they act as content-addressable (associative) memory systems. They remain a popular tool for understanding deep learning in the 21st century.
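A Hopfield network stores patterns in a symmetric weight matrix and recalls a stored pattern from a corrupted cue by repeatedly setting each unit to the sign of its weighted input. The Python sketch below uses the standard Hebbian storage rule with bipolar (+1/-1) states and asynchronous updates; the two 8-unit patterns are hypothetical examples, chosen to be orthogonal so that recall is reliable.

```python
def train_hopfield(patterns):
    """Build a symmetric weight matrix with the Hebbian outer-product rule (no self-connections)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, cue, sweeps=5):
    """Update units one at a time (asynchronously) to the sign of their input."""
    state = list(cue)
    n = len(state)
    for _ in range(sweeps):
        for i in range(n):
            field = sum(weights[i][j] * state[j] for j in range(n))
            state[i] = 1 if field >= 0 else -1
    return state


# Hypothetical stored memories
pattern_a = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_b = [1, -1, 1, -1, 1, -1, 1, -1]
W = train_hopfield([pattern_a, pattern_b])

noisy_a = [-1, 1, 1, 1, -1, -1, -1, -1]  # pattern_a with its first unit flipped
print(recall(W, noisy_a) == pattern_a)  # True
```

This is what “content-addressable” means: the network retrieves a whole memory from a partial or noisy version of its content rather than from an address. Capacity is limited; a classic estimate is roughly 0.14n random patterns for n units.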

Neural Network Anglicized

Terry Sejnowski is a professor at the Salk Institute for Biological Studies, where he directs the Computational Neurobiology Laboratory and the Crick-Jacobs Center for Theoretical and Computational Biology.

In 1985, he created NETtalk, a program that learned to pronounce written English words and letters much as a child does. The program could train itself and improve over time to pronounce new English words, converting text to speech.

He pioneered research in neural networks and computational neuroscience.

Shape Recognition and Word prediction

In 1986, David Rumelhart, Geoffrey Hinton, and Ronald J. Williams published the paper “Learning Representations by Back-propagating Errors,” which described backpropagation in great detail.

The paper showed how much backpropagation could improve existing neural networks at tasks such as predicting speech and recognizing images. Of the three authors, Hinton kept up his research through the second AI winter, and he later received the 2018 Turing Award for his work on neural networks. Many consider him the godfather of deep learning.
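Backpropagation applies the chain rule layer by layer, passing the output error backward to obtain the gradient of the loss with respect to every weight. The Python sketch below does this by hand for a hypothetical network with one sigmoid hidden unit and one linear output under squared error, then checks the result against a finite-difference estimate; the parameter values and inputs are arbitrary assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)  # hidden activation
    y = w2 * h + b2           # linear output
    return h, y


def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params, x, target):
    """Gradients of the squared-error loss, computed with the chain rule."""
    _, _, w2, _ = params
    h, y = forward(params, x)
    dy = y - target           # dL/dy
    dw2, db2 = dy * h, dy     # output-layer gradients
    dh = dy * w2              # error passed back to the hidden unit
    dz = dh * h * (1.0 - h)   # through the sigmoid: sigmoid'(z) = h * (1 - h)
    dw1, db1 = dz * x, dz     # hidden-layer gradients
    return [dw1, db1, dw2, db2]


def numerical_gradient(params, x, target, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    grads = []
    for k in range(len(params)):
        up = list(params)
        down = list(params)
        up[k] += eps
        down[k] -= eps
        grads.append((loss(up, x, target) - loss(down, x, target)) / (2 * eps))
    return grads


params = [0.5, -0.2, 1.5, 0.1]  # w1, b1, w2, b2 (arbitrary values)
analytic = backprop(params, x=2.0, target=1.0)
numeric = numerical_gradient(params, x=2.0, target=1.0)
print(all(abs(a - n) < 1e-6 for a, n in zip(analytic, numeric)))  # True
```

Training then moves each parameter a small step against its gradient; repeating this over many examples is what lets multi-layer networks learn useful internal representations.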

In 1989, Christopher (Chris) Watkins submitted his PhD thesis, “Learning from Delayed Rewards,” at King's College, Cambridge. In it, he introduced Q-learning, which made reinforcement learning in machines far more practical and feasible.
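At the center of Q-learning is a single update that nudges the estimated value of taking action `a` in state `s` toward the reward plus the discounted value of the best next action: `Q(s, a) <- Q(s, a) + alpha * (r + gamma * max Q(s', .) - Q(s, a))`. The Python sketch below applies this update to a hypothetical five-state corridor in which the agent is rewarded for reaching the right-hand end. To keep it deterministic, it sweeps over every state-action pair instead of exploring with a randomized policy, which is a simplification; the environment, learning rate and discount factor are all illustrative assumptions.

```python
N_STATES = 5      # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic corridor: reward 1 only on reaching the goal state."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(sweeps=200, alpha=0.5, gamma=0.9):
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(sweeps):
        for s in range(N_STATES - 1):  # the goal state is terminal
            for a in ACTIONS:
                s_next, r, done = step(s, a)
                target = r if done else r + gamma * max(Q[s_next])
                Q[s][a] += alpha * (target - Q[s][a])  # the Q-learning update
    return Q


Q = q_learning()
policy = [max(ACTIONS, key=lambda a: Q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1], i.e. always move right
```

The delayed reward at the goal propagates backward one state at a time through the `max` term, which is exactly the “learning from delayed rewards” in the thesis title.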

The proposition of Long Short-Term Memory

In 1997, Sepp Hochreiter and Jürgen Schmidhuber proposed a recurrent neural network architecture called Long Short-Term Memory (LSTM), which aimed to make RNNs more practical by overcoming the long-term dependency problem. As a result, an LSTM can remember information for much longer than a standard RNN.

Continuously improved since then, LSTMs are widely used in deep learning circles, and Google has used them for speech recognition in its Android products.
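The gating idea that lets an LSTM keep information for long periods can be seen in a single cell: a forget gate scales the old cell state, an input gate decides how much new content to write, and an output gate controls what is exposed. The Python sketch below is a scalar, single-unit version of the now-standard LSTM cell (the forget gate was added to the original 1997 design a few years later); the hand-picked weights are hypothetical values chosen so the cell writes when the input is 1 and then holds that value.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM cell with scalar parameters p."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate content
    c = f * c_prev + i * g  # keep most of the old state, add gated new content
    h = o * math.tanh(c)    # exposed hidden state
    return h, c


# Hypothetical hand-set parameters: forget gate almost always open,
# input gate opens only when x == 1, recurrent weights all zero
params = {
    "wf": 0.0, "uf": 0.0, "bf": 10.0,
    "wi": 20.0, "ui": 0.0, "bi": -10.0,
    "wo": 0.0, "uo": 0.0, "bo": 0.0,
    "wg": 5.0, "ug": 0.0, "bg": 0.0,
}

h, c = 0.0, 0.0
sequence = [1.0] + [0.0] * 50  # write once, then 50 steps with no new input
for x in sequence:
    h, c = lstm_step(x, h, c, params)
print(round(c, 3))
```

Because the cell state is updated additively and scaled by a forget gate that can stay close to 1, the stored value decays very slowly over the 50 silent steps; a simple RNN that repeatedly squashes its state through a nonlinearity tends to lose such information.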

Contributions of LeCun

Yann LeCun is Chief AI Scientist at Facebook.

One of his greatest contributions to neural networks was combining convolutional neural networks (CNNs) with the backpropagation theories of the time to recognize handwritten digits in 1989. The system was later used to read handwritten checks in the US.

In 1998, he published the paper “Gradient-Based Learning Applied to Document Recognition.” Gradient-based learning, typically stochastic gradient descent (SGD) combined with the backpropagation algorithm, remains the most successful approach to deep learning.
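Stochastic gradient descent updates the parameters after each training example (or small batch) rather than after a full pass over the data. The Python sketch below fits a straight line with per-example SGD; the data points, learning rate and epoch count are made-up assumptions, and the gradients are derived by hand for this one-layer model rather than by a full backpropagation pass.

```python
import random


def sgd_fit_line(data, learning_rate=0.01, epochs=2000, seed=0):
    """Fit y = w * x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    samples = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(samples)  # visit examples in a fresh random order each epoch
        for x, y in samples:
            error = (w * x + b) - y         # prediction minus target
            w -= learning_rate * error * x  # gradient of 0.5 * error**2 w.r.t. w
            b -= learning_rate * error      # gradient of 0.5 * error**2 w.r.t. b
    return w, b


# Hypothetical noise-free data generated from y = 2x + 1
points = [(x, 2 * x + 1) for x in range(5)]
w, b = sgd_fit_line(points)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

In a deep network the same loop applies, with backpropagation supplying the gradient for every layer's weights.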

ImageNet

In 2009, Fei-Fei Li launched ImageNet. Li is a professor at Stanford University and has led its AI lab.

ImageNet is a dataset of images organized according to the WordNet hierarchy. Each meaningful concept in WordNet, which may be described by several words or word phrases, is called a “synonym set” or “synset.” WordNet contains more than 100,000 synsets, most of them (around 80,000) nouns.

By 2017, ImageNet had grown into a large database of about 14 million labeled images available to students and researchers.

AlexNet

Alex Krizhevsky built on LeNet-5, which Yann LeCun published in 1998, and improved it.

In 2012, his network won the ImageNet challenge by a wide margin, achieving a top-5 error of 15.3% compared with about 26% for the runner-up.

The network shared many features with LeNet, but it was deeper and had more filters per layer. AlexNet was designed by Krizhevsky, Ilya Sutskever and Hinton, who competed as the SuperVision group.
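The top-5 error quoted for AlexNet counts a prediction as correct if the true label is among the model's five highest-scoring classes. A minimal Python sketch of the metric follows; the function name and the scores for six classes are hypothetical examples.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of examples whose true label is not among the k highest-scoring classes."""
    misses = 0
    for class_scores, label in zip(scores, labels):
        ranked = sorted(range(len(class_scores)), key=lambda c: class_scores[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Two hypothetical examples scored over six classes
scores = [
    [0.1, 0.2, 0.3, 0.4, 0.5, 0.6],  # true class 1 is ranked 5th
    [0.6, 0.5, 0.4, 0.3, 0.2, 0.1],  # true class 5 is ranked last
]
labels = [1, 5]
print(top_k_error(scores, labels, k=5))  # 0.5
print(top_k_error(scores, labels, k=1))  # 1.0
```

Top-5 error is more forgiving than top-1 error, which matters when many of the challenge's 1,000 classes look alike.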

YouTube Cat Experiment

In 2012, a Google team built a neural network spread across thousands of processors, showed it 10 million images taken at random from YouTube, and let it analyze them.

The learning was unsupervised: with no labels or outside help, the system learned by the end of training to identify cats in images. It performed about 70% better than previous attempts.

The system still correctly recognized only about 15% of the images it was evaluated on; even so, the researchers considered it a fundamental step toward more advanced AI.

Face Recognition accuracy

In 2014, Facebook released DeepFace, its large-scale deep learning application, which uses neural networks to identify faces with 97.35% accuracy. This reduced the error of previous approaches by more than 27%.

This was a breakthrough because human accuracy on the same task is about 97.5%, so Facebook came very close to human performance.

Google Images now employs a program similar to DeepFace.

New findings and applications are emerging faster than ever. Making human tasks easier is one reason major platforms such as Amazon, Google and Apple are pouring resources into AI research.

Intelligence fused with metal is a wonderful sight to behold, and more remarkable realities are waiting just around the corner.